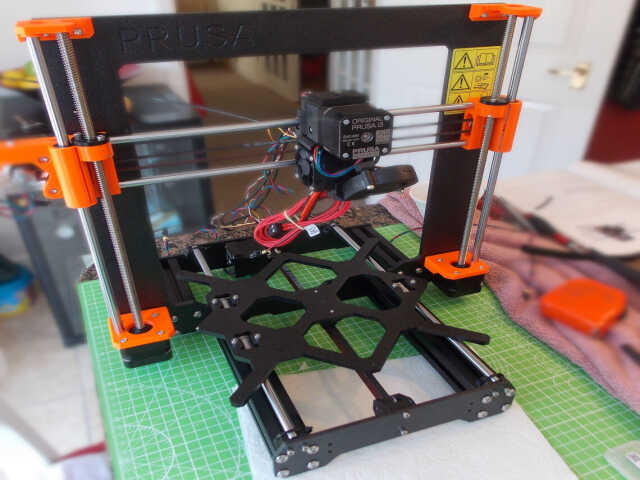

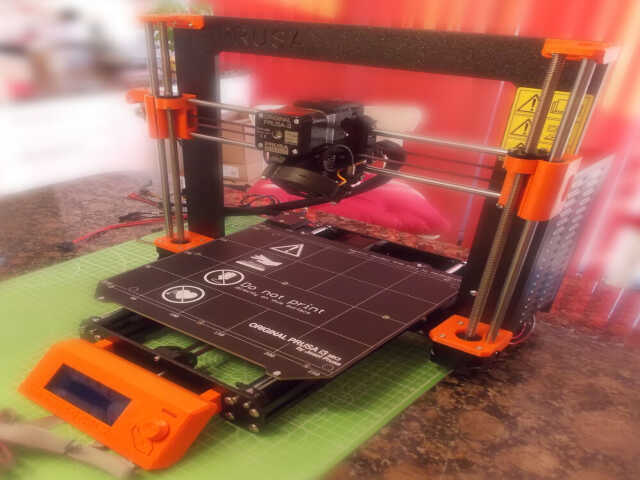

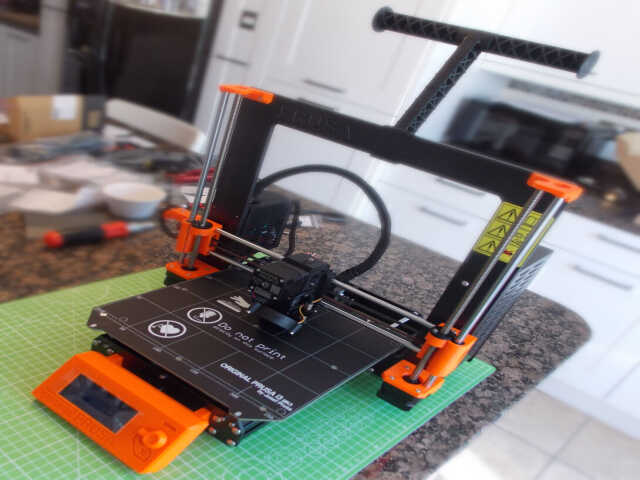

Recently decided to start learning about 3D printing. Ordered a Prusa i3 MK3S kit a few weeks ago, and it arrived a couple of days ago. The packaging and documentation were excellent, and the print results were essentially flawless.

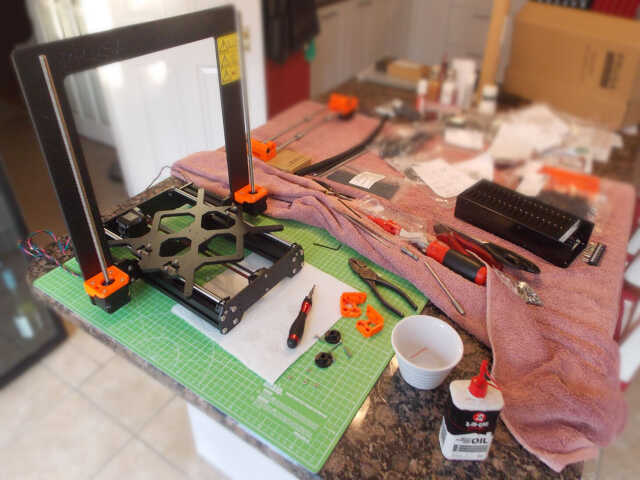

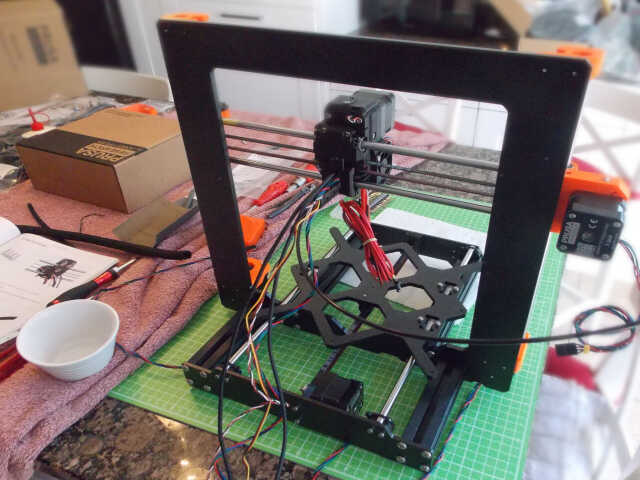

The first step was to assemble the X and Y axes. This basically consisted of bolting together aluminium frame pieces. Care had to be taken to ensure that everything was aligned correctly and that the frame didn't wobble when placed on a flat surface.

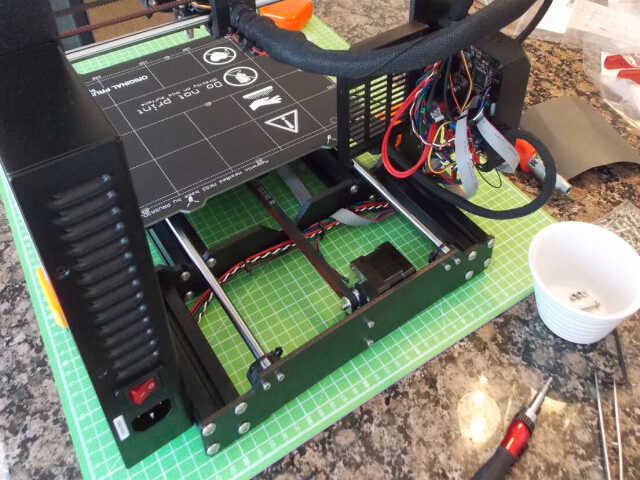

Next, the heated bed carriage had to be attached to rods:

Next, the belt had to be connected to the motor that drives the heated bed carriage:

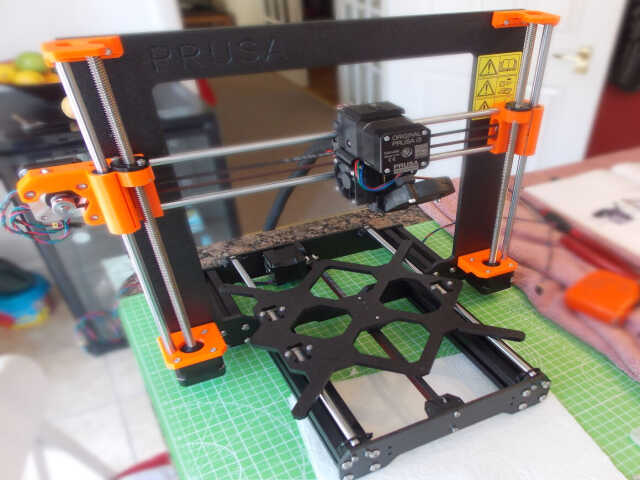

The Z axis motors were then connected to the frame along with the threaded rods that are used to move the extruder on the Z axis:

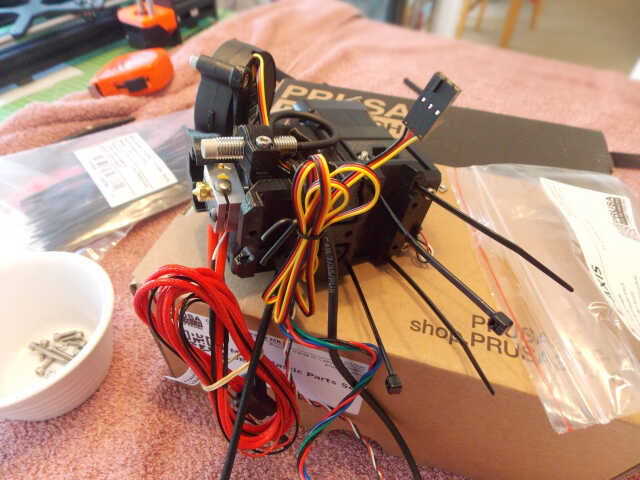

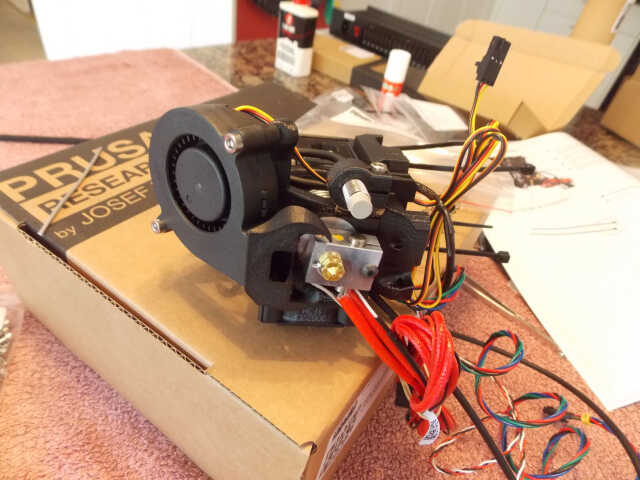

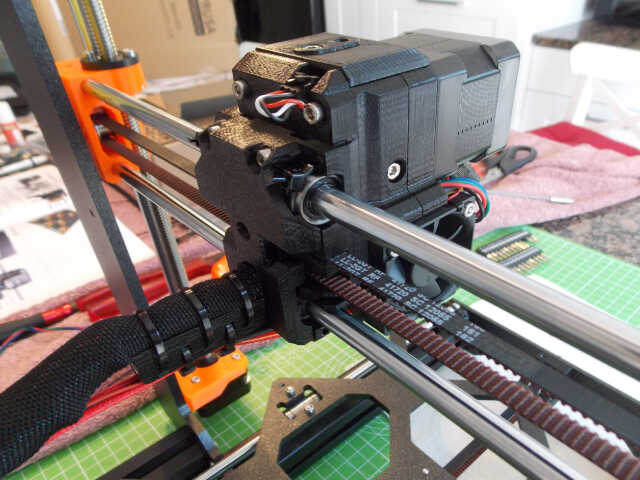

Assembling the extruder took the best part of a day. I took excessive care to heed all of the warnings given in the documentation...

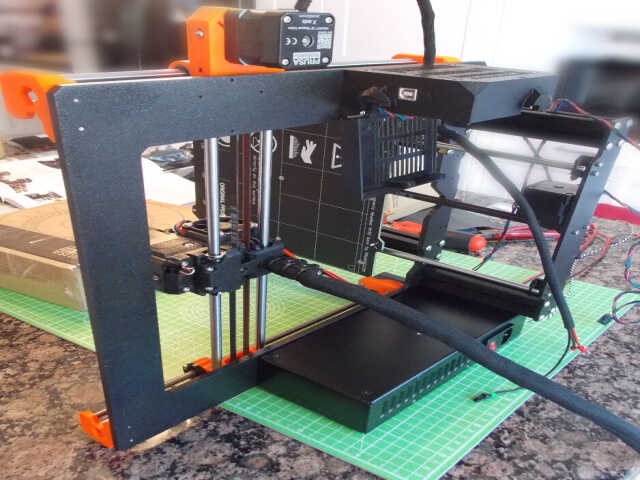

The extruder then had to be mounted, and the various cables inserted into a textile sleeve in order to keep them out of the way during printing:

The LCD panel was mounted on the front of the frame:

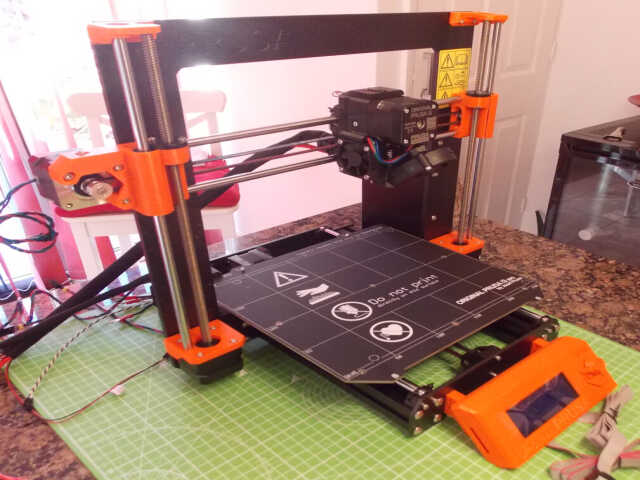

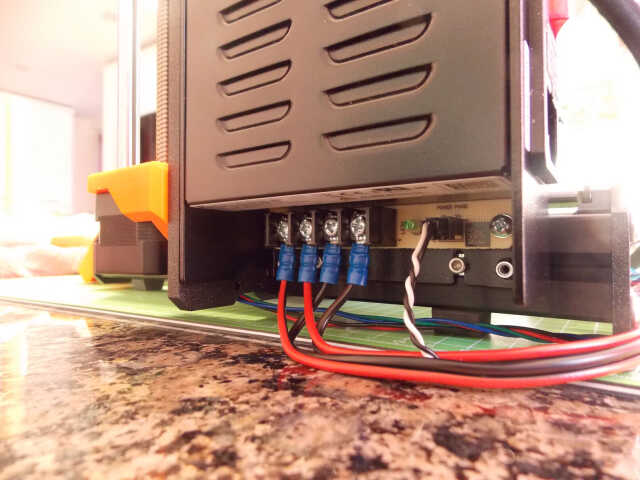

This was followed by the heatbed and the 24V power supply:

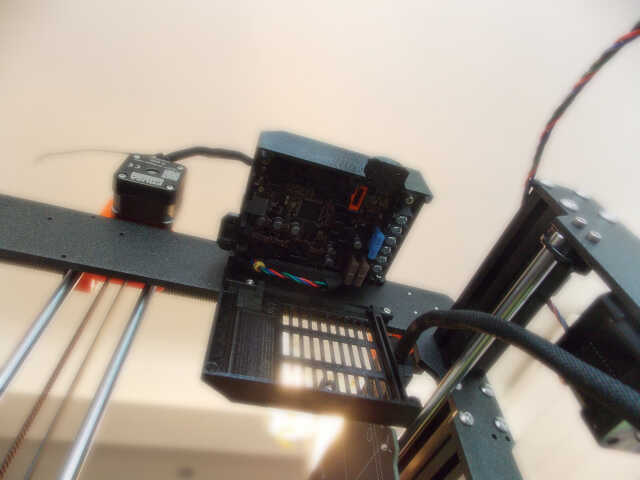

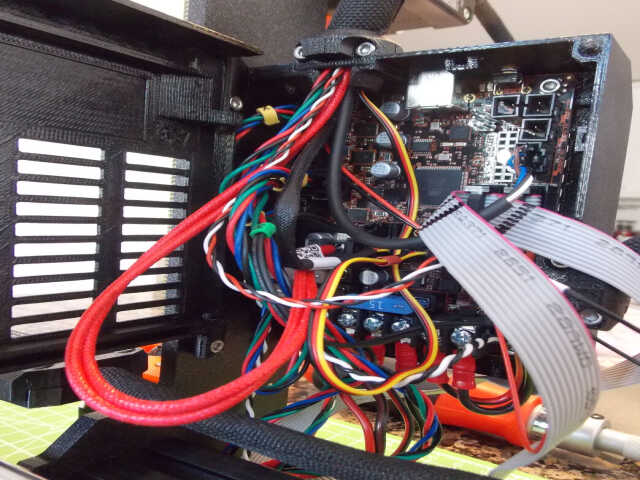

It was then necessary to attach the microcontroller and connect all of the motor cables and other cables to it. This was easy enough, and the documentation went into a lot of detail on how to manage the cables in a sane way.

The last step was to attach the filament spool holder.

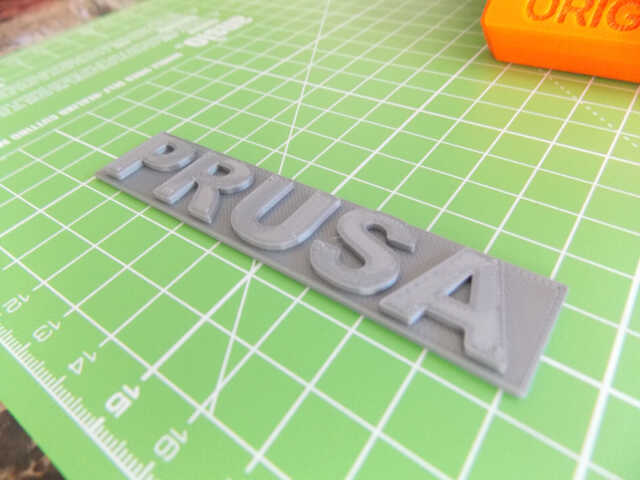

After calibration, the initial PRUSA logo and Pug test print gave good results:

Gave a presentation at the Sonatype User Conference back on 2020-08-12 on how the Library Simplified project uses Maven Central and the principles of modularity to develop and deliver Android components.

I'm considering changing the range of JDKs I support.

Right now, I specify JDK 11 as the target bytecode level. That is, you require at least JDK 11 to run any code I write. I specify the range of allowed compiler versions as [11, 14]. That is, you can build the code on JDK 11 or on any newer JDK older than 15.

I'd like to adjust this a bit to reduce the range of JDKs upon which I test code. Specifically, these would be the new rules:

- The target bytecode level is CurrentLTS. That is, you require at least JDK CurrentLTS to run any code I write.
- The set of allowed compiler versions is [CurrentLTS, CurrentLTS + 1), [Current, Current + 1).

At the time of writing, CurrentLTS = 11 (the next value of CurrentLTS will be 17), and Current = 14.

So under the new rules, at the time of writing, you could run the code on JDK 11, 12, 13, and 14. You could build the code on JDK 11, and any JDK greater than or equal to 14 but less than 15. This slightly odd-looking range is necessary because JDK compiler versions contain minor and patch levels such as 14.0.2.
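As a rough illustration of the rules above, here's a minimal sketch in Java. The class and method names (`JdkSupportPolicy`, `canRunOn`, `canBuildOn`) are made up for this example and aren't part of any of my builds. It also assumes that comparing only the feature number of a version (the `14` in `14.0.2`) is an acceptable reading of the half-open ranges.

```java
import java.util.List;

public final class JdkSupportPolicy {

    // Values from the text, at the time of writing.
    static final int CURRENT_LTS = 11;
    static final int CURRENT = 14;

    private JdkSupportPolicy() {
    }

    /** True if code compiled to the CurrentLTS bytecode level can run on this JDK. */
    public static boolean canRunOn(String version) {
        return Runtime.Version.parse(version).feature() >= CURRENT_LTS;
    }

    /**
     * True if the version falls in [CurrentLTS, CurrentLTS + 1) or
     * [Current, Current + 1). Only the feature number is compared
     * (an assumption), so 14.0.2 counts as being inside [14, 15).
     */
    public static boolean canBuildOn(String version) {
        int feature = Runtime.Version.parse(version).feature();
        return feature == CURRENT_LTS || feature == CURRENT;
    }

    public static void main(String[] args) {
        // Print the run/build verdict for a handful of JDK versions.
        for (String v : List.of("11.0.8", "12.0.2", "13.0.2", "14.0.2", "15")) {
            System.out.printf("%-7s run=%-5b build=%b%n", v, canRunOn(v), canBuildOn(v));
        }
    }
}
```

In a real build, a check like this would more likely live in the build tool's configuration than in application code. The sketch is only meant to show why the ranges are half-open: a plain "equals 14" test against a full version string would reject 14.0.2.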

The explicit support statement would be that only JDK versions upon which the code is regularly built and the test suite executed would be supported. You could run the code on JDK 12 and 13, but you'd be on your own there as the code is only built and tested on 11 and 14.

This would reduce the number of CI builds I need to execute on each commit from at most six (11, 12, 13, 14, 15, 16) to exactly two (11, 14). With project counts in three figures, the build counts quickly add up!