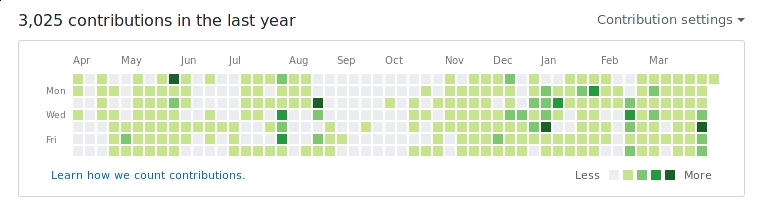

Managed to hit 3000 GitHub contributions today:

Had a change of heart. Doing all of the package renames now rather than waiting for Java 9. I wrote:

> There is the possibility that changing the entire name of a project could be considered a non-compatibility-breaking change according to semantic versioning...

I'm choosing to believe this is true and am renaming projects and modules without incrementing the major version number. I'm using japicmp to verify that I'm not introducing binary-incompatible or source-incompatible changes.

Sometimes, what you really need is a mutable, boxed integer.

While updating jcanephora, I discovered that I needed to update jpra to use the new jtensors types. Whilst doing this, I discovered that the new simplified implementation of the ByteBuffer-based storage tensors that I'd written was too simple: the jpra package made use of the cursor-like API that the old jtensors-bytebuffered package provided. I'd not provided anything analogous to this in the new API, so I had to do some rewriting. In the process, I discovered that the code that jpra generated was using an AtomicLong value to store the current byte offset value. The reason it used an AtomicLong value was simply because there was no mutable, boxed long value in the Java standard library. To remedy this, I've created a trivial mutable numbers package upon which the com.io7m.jtensors.storage.bytebuffered and com.io7m.jpra.runtime.java modules now depend. I should have done this years ago but didn't, for whatever reason.

https://github.com/io7m/jmutnum

It may be the least interesting software package I've ever written.
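For illustration only, here is roughly what a mutable, boxed long and a cursor that shares one might look like. This is a minimal sketch and not the actual jmutnum or jpra API: the `MutableLongBox` and `LongCursor` names, and their methods, are made up for this example.

```java
import java.nio.ByteBuffer;

// Hypothetical illustration of a mutable, boxed long (not the jmutnum API).
// Unlike AtomicLong, it makes no thread-safety promises and pays for none.
final class MutableLongBox
{
  private long value;

  MutableLongBox(final long initial)
  {
    this.value = initial;
  }

  long value()
  {
    return this.value;
  }

  void setValue(final long newValue)
  {
    this.value = newValue;
  }
}

// Hypothetical cursor: reads a long from a buffer at whatever byte offset
// the shared box currently holds.
final class LongCursor
{
  private final ByteBuffer buffer;
  private final MutableLongBox offset;

  LongCursor(final ByteBuffer buffer, final MutableLongBox offset)
  {
    this.buffer = buffer;
    this.offset = offset;
  }

  long read()
  {
    // ByteBuffer indices are ints, so fail loudly if the offset is too large.
    return this.buffer.getLong(Math.toIntExact(this.offset.value()));
  }
}
```

Moving the cursor is then just a matter of calling `setValue` on the shared box; every view that holds the same box sees the new offset without any atomic operations being involved.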

Going to start working on moving jcanephora to jtensors 8.0.0-SNAPSHOT in order to flush out any problems with jtensors before I try to do a stable 8.0.0 release.

The jtensors implementation is basically done. I need to release the 1.0.0 version of the primogenitor, though, and I can't do this until the 0.10.0 version of japicmp is released.

I like this sort of pure code because it allows for property-based testing à la QuickCheck. The general idea is to specify mathematical properties of the code abstractly and then check to see if those properties hold concretely for a large set of randomly selected inputs. In the absence of tools to formally prove properties about code, this kind of property-based testing is useful for checking the likelihood that the code is correct. For example, the test suite now has methods such as:

    /**
     * ∀ v0 v1. add(v0, v1) == add(v1, v0)
     */
    @Test
    @PercentagePassing
    public void testAddCommutative()
    {
      final Generator<Vector4D> gen = createGenerator();

      final Vector4D v0 = gen.next();
      final Vector4D v1 = gen.next();

      final Vector4D vr0 = Vectors4D.add(v0, v1);
      final Vector4D vr1 = Vectors4D.add(v1, v0);

      checkAlmostEquals(vr0.x(), vr1.x());
      checkAlmostEquals(vr0.y(), vr1.y());
      checkAlmostEquals(vr0.z(), vr1.z());
      checkAlmostEquals(vr0.w(), vr1.w());
    }

Of course, in Haskell this would be somewhat less verbose:

    quickCheck (\(v0, v1) -> almostEquals (add v0 v1) (add v1 v0))

The @PercentagePassing annotation marks the test as being executed 2000 times (by default) with at least 95% (by default) of the executions being required to pass in order for the test to pass as a whole. The reason that the percentage isn't 100% is due to numerical imprecision: the nature of floating point numbers means that it's really only practical to try to determine if two numbers are equal to each other within an acceptable margin of error. Small (acceptable) errors can creep in during intermediate calculations such that if the two results were to be compared for exact equality, the tests would almost always fail. Sometimes, the errors are large enough that although the results are "correct", they fall outside of the acceptable range of error for the almost equals check to succeed.
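To make the "run it many times and require most runs to pass" idea concrete, here is a minimal sketch in plain Java. It is not how @PercentagePassing is actually implemented: the `PercentagePassingSketch` class, its method names, and the `1.0e-9` tolerance are all assumptions made for this example. Only the 2000 iterations and the 95% threshold come from the defaults described above.

```java
import java.util.Random;
import java.util.function.Predicate;

// Hypothetical sketch of "percentage passing" semantics; not the real
// @PercentagePassing implementation.
final class PercentagePassingSketch
{
  static final int ITERATIONS = 2000;
  static final double REQUIRED_PERCENT = 95.0;

  // Absolute-tolerance comparison; the tolerance is an arbitrary choice.
  static boolean almostEquals(final double x, final double y)
  {
    return Math.abs(x - y) <= 1.0e-9;
  }

  // Evaluates the property ITERATIONS times, drawing inputs from one seeded
  // random source, and returns the percentage of evaluations that held.
  static double percentPassing(final Predicate<Random> property, final long seed)
  {
    final Random random = new Random(seed);
    int passed = 0;
    for (int i = 0; i < ITERATIONS; ++i) {
      if (property.test(random)) {
        ++passed;
      }
    }
    return 100.0 * (double) passed / (double) ITERATIONS;
  }

  // ∀ a b. a + b == b + a, within tolerance
  static boolean addCommutative(final Random r)
  {
    final double a = r.nextDouble();
    final double b = r.nextDouble();
    return almostEquals(a + b, b + a);
  }

  // ∀ a b c. (a + b) + c == a + (b + c), within tolerance
  static boolean addAssociativeAlmost(final Random r)
  {
    final double a = r.nextDouble();
    final double b = r.nextDouble();
    final double c = r.nextDouble();
    return almostEquals((a + b) + c, a + (b + c));
  }

  // The same property compared exactly; rounding in the intermediate sums
  // means this can fail even though the arithmetic is "correct".
  static boolean addAssociativeExact(final Random r)
  {
    final double a = r.nextDouble();
    final double b = r.nextDouble();
    final double c = r.nextDouble();
    return ((a + b) + c) == (a + (b + c));
  }
}
```

A test would then pass as a whole if `percentPassing(PercentagePassingSketch::addAssociativeAlmost, seed)` is at least `REQUIRED_PERCENT`. Feeding `addAssociativeExact` through the same harness is a quick way to see how much the exact comparison costs.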

There's a classic (and pretty mathematically intense) paper on this called "What Every Computer Scientist Should Know About Floating-Point Arithmetic". The subject was given an extensive treatment by Bruce Dawson, and his explanations formed the basis for my jequality package. I actually tried to use junit's built-in floating point comparison assertions for the test suite at first, but they turned out to be way too unreliable.
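As a rough idea of what a comparison scaled to the operands looks like, here is a sketch of a relative-error check in the general style that Bruce Dawson describes. It is not the jequality API: the `AlmostEqualSketch` class, the method name, and the `maxRelDiff` parameter are assumptions made for this example.

```java
// Hypothetical sketch of a relative-error comparison; not the jequality API.
final class AlmostEqualSketch
{
  static boolean almostEqualRelative(
    final double a,
    final double b,
    final double maxRelDiff)
  {
    // Infinities are only equal to themselves; without this guard,
    // +Infinity and -Infinity would compare as "almost equal" below.
    if (Double.isInfinite(a) || Double.isInfinite(b)) {
      return a == b;
    }

    // If either value is NaN, diff is NaN and the comparison below is false.
    final double diff = Math.abs(a - b);
    final double largest = Math.max(Math.abs(a), Math.abs(b));

    // The allowed error grows with the magnitude of the operands.
    return diff <= largest * maxRelDiff;
  }
}
```

With this, `0.1 + 0.2` and `0.3` compare as almost equal for a `maxRelDiff` such as `1.0e-9`, even though `==` says they differ. A purely relative check like this behaves poorly when the values are very close to zero, so it is usually paired with a small absolute tolerance in practice.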

Update: Without even an hour having passed since this post was published, japicmp 0.10.0 has been released!