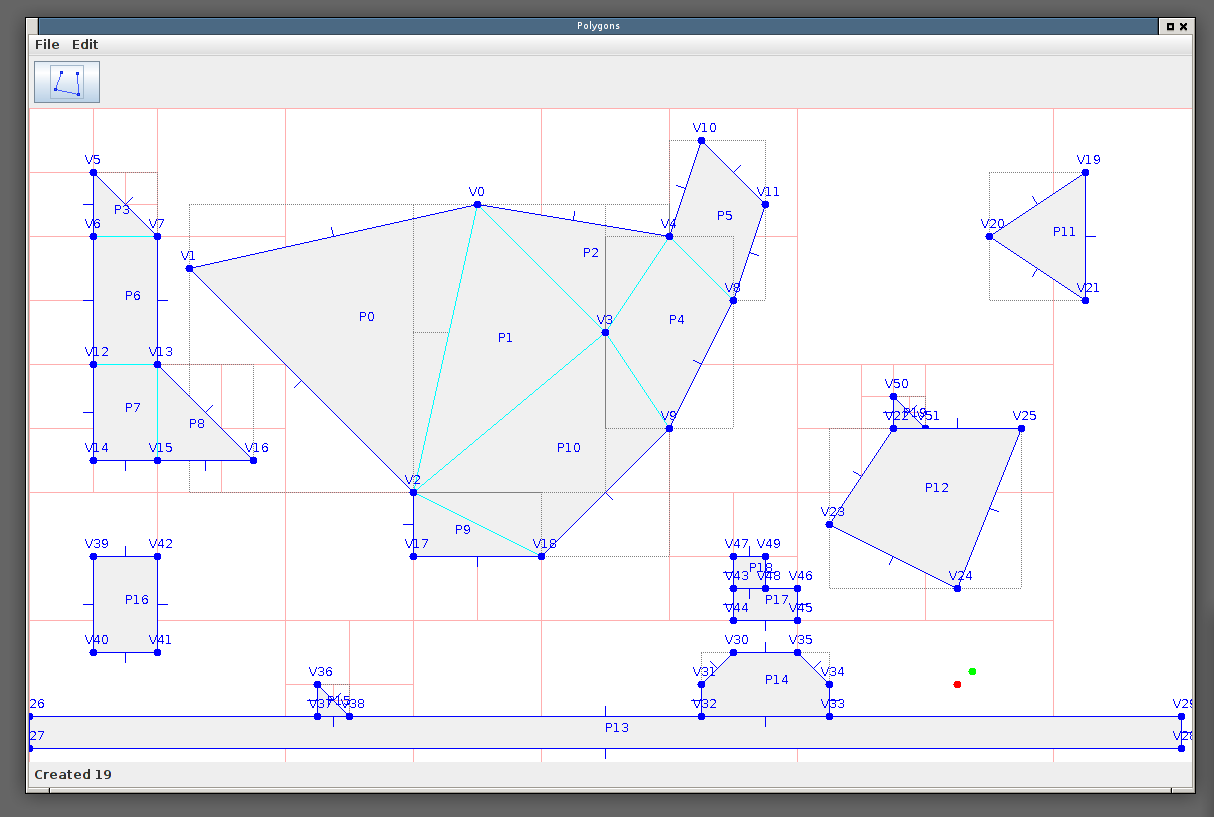

Experimenting with a room model for the engine.

Made some corrections to zeptoblog to ensure that the output is valid XHTML 1.0 Strict. I bring this up because it directly affects this blog. I'm now validating the output of this blog against the XHTML 1.0 Strict XSD schema, so any problems of this type should be caught immediately in future.
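For readers who want to try this kind of check, here is a minimal sketch of validating a document against an XSD using the JDK's standard `javax.xml.validation` API. It is not how zeptoblog itself does it (that isn't shown here). The `SchemaCheck` class and the tiny `TOY_SCHEMA` are made up for illustration and stand in for the real XHTML 1.0 Strict schema.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import org.xml.sax.SAXException;

final class SchemaCheck
{
  // Hypothetical toy schema: a <post> element containing exactly one <title>.
  // This stands in for the real XHTML 1.0 Strict XSD.
  static final String TOY_SCHEMA =
    "<xs:schema xmlns:xs='http://www.w3.org/2001/XMLSchema'>"
      + "<xs:element name='post'>"
      + "<xs:complexType><xs:sequence>"
      + "<xs:element name='title' type='xs:string'/>"
      + "</xs:sequence></xs:complexType>"
      + "</xs:element>"
      + "</xs:schema>";

  // Compile the schema text; a schema that does not compile is a caller error.
  static Schema compile(String schemaText)
  {
    try {
      SchemaFactory factory =
        SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
      return factory.newSchema(new StreamSource(new StringReader(schemaText)));
    } catch (SAXException e) {
      throw new IllegalArgumentException("schema does not compile", e);
    }
  }

  // Returns true if the document is well-formed and valid against the schema.
  static boolean isValid(String schemaText, String documentText)
  {
    try {
      compile(schemaText)
        .newValidator()
        .validate(new StreamSource(new StringReader(documentText)));
      return true;
    } catch (SAXException e) {
      // Malformed or invalid documents are both reported as SAXException.
      return false;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args)
  {
    System.out.println(isValid(TOY_SCHEMA, "<post><title>Hello</title></post>"));
    System.out.println(isValid(TOY_SCHEMA, "<post><body>Hello</body></post>"));
  }
}
```

Running a check like this as part of the build is what makes problems show up immediately rather than after publishing.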

I released version 1.0.0 of jregions a while back and then found that I wanted to make some changes to the API. I didn't want to make a compatibility-breaking change this close to 1.0.0, so I decided to make the changes but keep some deprecated compatibility methods in place.

However, some of the types involved are generated by the immutables package. The way the package works is that you define an abstract interface type and immutables generates an immutable implementation of this interface. One of the parts of the generated implementation is a builder type that allows you to construct instances of the implementation type in multiple steps. For example, a declaration like this:

    import org.immutables.value.Value;

    @Value.Immutable
    interface SizeType
    {
      int width();

      int height();
    }

... would result in the generation of an immutable Size class that contained a mutable Size.Builder type capable of constructing values of Size:

    Size s = Size.builder().setWidth(640).setHeight(480).build();

In my case, I wanted to rename the width and height methods to something more generic. Specifically, width should be sizeX and height should be sizeY. Clearly, if I just renamed the methods in the SizeType, then the generated type and the generated builder type would both be source and binary incompatible. I could do this:

    @Value.Immutable
    interface SizeType
    {
      int sizeX();

      int sizeY();

      @Deprecated
      default int width()
      {
        return sizeX();
      }

      @Deprecated
      default int height()
      {
        return sizeY();
      }
    }

That would at least preserve source and binary compatibility for the API of the generated type, but the generated builder type would no longer have setWidth or setHeight methods, so source and binary compatibility would be broken there. I asked on the immutables.org issue tracker, and right away Eugene Lukash stepped in with a nice solution. Thanks again Eugene!
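Eugene's solution isn't reproduced here, and the following is not it. As a rough illustration of the goal, this is a hand-written stand-in (not generated by immutables) showing what the builder needs to expose for old callers to keep compiling and linking: the new setSizeX and setSizeY methods, plus deprecated setWidth and setHeight methods that delegate to them.

```java
// Hand-written sketch of the desired API shape; in the real project the
// Size class and its builder are generated by immutables.
final class Size
{
  private final int sizeX;
  private final int sizeY;

  private Size(int sizeX, int sizeY)
  {
    this.sizeX = sizeX;
    this.sizeY = sizeY;
  }

  int sizeX()
  {
    return this.sizeX;
  }

  int sizeY()
  {
    return this.sizeY;
  }

  // Old accessor names, kept only for compatibility.
  @Deprecated
  int width()
  {
    return sizeX();
  }

  @Deprecated
  int height()
  {
    return sizeY();
  }

  static Builder builder()
  {
    return new Builder();
  }

  static final class Builder
  {
    private int sizeX;
    private int sizeY;

    Builder setSizeX(int x)
    {
      this.sizeX = x;
      return this;
    }

    Builder setSizeY(int y)
    {
      this.sizeY = y;
      return this;
    }

    // Old setter names delegate to the new ones so existing callers still work.
    @Deprecated
    Builder setWidth(int width)
    {
      return setSizeX(width);
    }

    @Deprecated
    Builder setHeight(int height)
    {
      return setSizeY(height);
    }

    Size build()
    {
      return new Size(this.sizeX, this.sizeY);
    }
  }
}
```

With this shape, both `Size.builder().setWidth(640).setHeight(480).build()` and `Size.builder().setSizeX(640).setSizeY(480).build()` produce the same value, and the old spelling only earns a deprecation warning.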

Switch to the project directory, and:

    $ find . -name '*.iml' -exec rm -v {} \;
    $ rm -rfv .idea

Reopen the project and hope intensely.

PulseAudio has some problems.

I have a laptop and various machines for testing software across platforms, and they all send audio over the network to my main development machine. This allows me to use a single pair of headphones and to control audio levels in a single place. I'm using PulseAudio's networking support to achieve this but, unfortunately, it seems rather poor at it.

The first major problem with it is that when the TCP connection between the client and the server is broken for any reason, the only way to get that connection back appears to be to restart the client. This is pretty terrible; network connections are not reliable and any well-written networked software should be designed to be resilient in the case of bad network conditions. Simply retrying the connection with exponential backoff would help, possibly with an explicit means to reconnect via the pactl command line tool.

As an aside, the use of TCP is probably not a great choice either. Software that streams audio has soft real-time requirements and TCP is pretty widely acknowledged as being unsuitable for satisfying those requirements. An application such as an audio server is receiving packets of audio data and writing them to the audio hardware as quickly as it can. The audio data is time critical: If a packet of audio is lost or turns up late, then that is going to result in an audible gap or glitch in the produced sound no matter what happens. Therefore, an algorithm like TCP that will automatically buffer data when packets are reordered, and will automatically re-send data when packets are lost, is fundamentally unsuitable for use in this scenario. Best to use an unreliable transport like UDP, consider lost or late packets as lost, and just live with the momentary audio glitch. The next piece of audio will be arriving shortly anyway!
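The retry-with-exponential-backoff idea mentioned above can be sketched roughly as follows. This is not anything PulseAudio provides: the `Backoff` class, its method names, and the base and maximum delays are all made up for illustration, and the connection attempt is passed in as a callback so the sketch stays independent of any real client.

```java
import java.util.function.BooleanSupplier;
import java.util.function.LongConsumer;

final class Backoff
{
  // Delay before retrying after the given failed attempt (0-based):
  // baseMillis, then double each time, never exceeding maxMillis.
  static long delayMillis(int attempt, long baseMillis, long maxMillis)
  {
    if (attempt < 0 || baseMillis <= 0 || maxMillis < baseMillis) {
      throw new IllegalArgumentException("invalid backoff parameters");
    }
    long delay = baseMillis;
    for (int i = 0; i < attempt && delay < maxMillis; ++i) {
      // Compare against half the cap first so that doubling cannot overflow.
      delay = (delay > maxMillis / 2) ? maxMillis : delay * 2;
    }
    return delay;
  }

  // Calls tryConnect up to maxAttempts times, sleeping between failures.
  // Returns the number of attempts used, or -1 if every attempt failed.
  static int connectWithRetry(
    BooleanSupplier tryConnect,
    int maxAttempts,
    long baseMillis,
    long maxMillis,
    LongConsumer sleeper)
  {
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
      if (tryConnect.getAsBoolean()) {
        return attempt + 1;
      }
      if (attempt + 1 < maxAttempts) {
        sleeper.accept(delayMillis(attempt, baseMillis, maxMillis));
      }
    }
    return -1;
  }

  public static void main(String[] args)
  {
    for (int attempt = 0; attempt < 8; ++attempt) {
      System.out.println("attempt " + attempt + ": wait "
        + delayMillis(attempt, 100L, 5000L) + "ms");
    }
  }
}
```

A real client would pass something that actually opens the connection as `tryConnect` and something that calls `Thread.sleep` as `sleeper`, and would probably add some random jitter so that many clients don't all retry at the same instant.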

Ironically, the use of an unreliable transport would seem to make the software more reliable by eliminating the problem of having to supervise and maintain a connection to the server as sending data over UDP is effectively fire and forget.
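To make the fire-and-forget idea concrete, here is a small sketch over the loopback interface. It is not PulseAudio's wire protocol: the 8-byte sequence number header, the `LossyAudioLink` class, and the `LatePacketFilter` are assumptions made up for this example. The sender just sends datagrams and never waits for acknowledgement, and the receiver treats anything that arrives out of order as lost instead of waiting for it.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.SocketTimeoutException;
import java.nio.ByteBuffer;

final class LossyAudioLink
{
  // Receiver-side filter: accept a packet only if it is newer than
  // everything accepted so far. Late packets and duplicates are dropped.
  static final class LatePacketFilter
  {
    private long highestSeen = -1L;

    boolean accept(long sequence)
    {
      if (sequence <= this.highestSeen) {
        return false;
      }
      this.highestSeen = sequence;
      return true;
    }
  }

  // Prefix a chunk of audio with a (hypothetical) 8-byte sequence number.
  static byte[] encode(long sequence, byte[] audio)
  {
    return ByteBuffer.allocate(Long.BYTES + audio.length)
      .putLong(sequence)
      .put(audio)
      .array();
  }

  // Read the sequence number back out of a received datagram.
  static long sequenceOf(byte[] datagram, int length)
  {
    return ByteBuffer.wrap(datagram, 0, length).getLong();
  }

  public static void main(String[] args) throws IOException
  {
    InetAddress loopback = InetAddress.getLoopbackAddress();
    try (DatagramSocket receiver = new DatagramSocket(0, loopback);
         DatagramSocket sender = new DatagramSocket()) {
      receiver.setSoTimeout(250);

      // Fire and forget: no connection to maintain, no acknowledgements.
      // Sequence 1 is deliberately sent after 2 to imitate a late packet.
      for (long sequence : new long[] {0L, 2L, 1L, 3L}) {
        byte[] data = encode(sequence, new byte[] {0, 0, 0, 0});
        sender.send(new DatagramPacket(
          data, data.length, loopback, receiver.getLocalPort()));
      }

      LatePacketFilter filter = new LatePacketFilter();
      byte[] buffer = new byte[1500];
      try {
        while (true) {
          DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
          receiver.receive(packet);
          long sequence = sequenceOf(packet.getData(), packet.getLength());
          System.out.println(
            sequence + (filter.accept(sequence) ? ": played" : ": dropped as late"));
        }
      } catch (SocketTimeoutException e) {
        // Nothing more arrived within the timeout; stop listening.
      }
    }
  }
}
```

Note that there is no connection state on either side: if the receiver goes away and comes back, the sender neither knows nor cares, and audio resumes with the next datagram that happens to arrive.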

The second major problem, and I suspect (without good evidence) that this may be related to the choice of TCP as a protocol, is that the client and server can somehow become desynchronized, requiring both to be restarted. Essentially, what happens is that when either the client or the server is placed under heavy load, audio (understandably) begins to glitch. The problem is that even when the load returns to normal, audio remains broken. I've not been able to capture a recording of what happens, but it sounds a little like granular synthesis. As mentioned, the only way to fix this appears to be to restart both the client and server. A broken client can break the server, and a broken server can break the client!