There's a lot of literature on filtering pixel art in modern rendering engines. The basic issue is that we want to use nearest-neighbour filtering in order to preserve the sharp, high-contrast look of pixel art, but doing so introduces an annoying shimmering effect when the images are scaled and/or rotated. The effect is almost impossible to describe in text, so here's a video example:

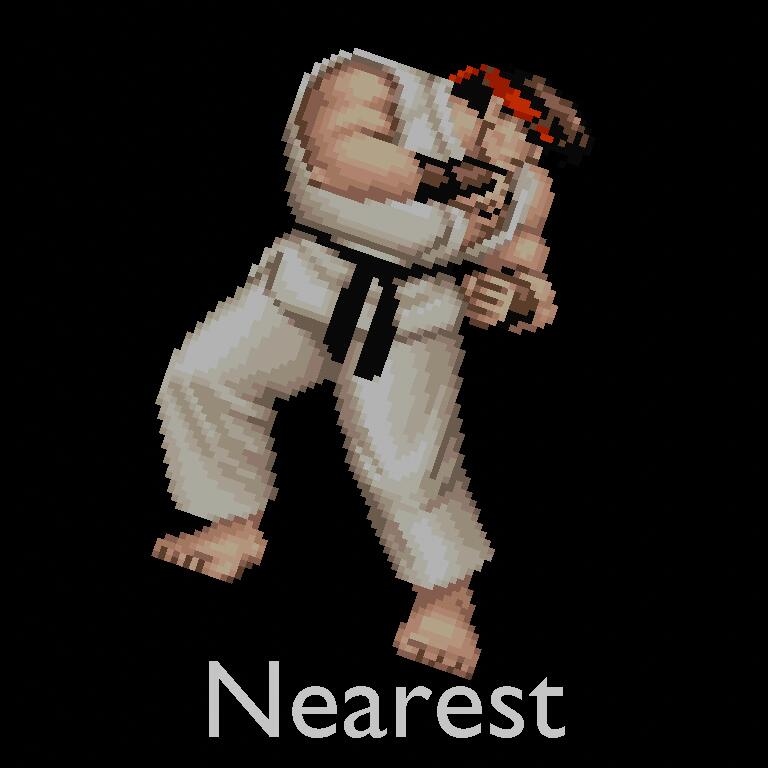

The issue can be seen in a still image if you know what to look for. Here's the frame of the video where the character is rotated:

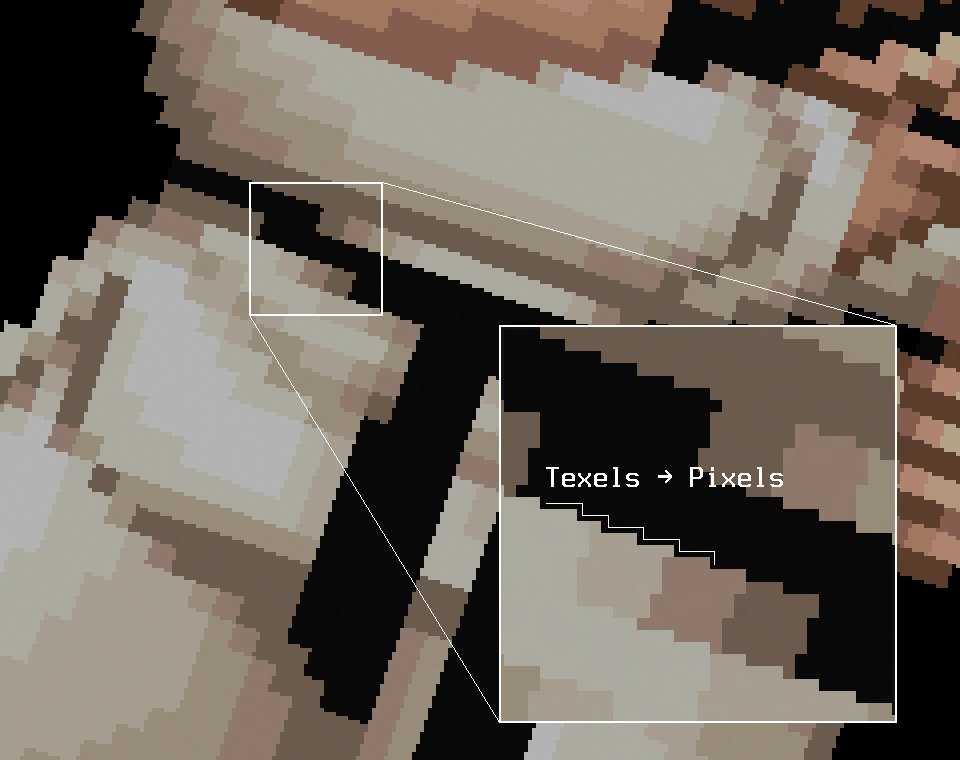

Zooming in:

The basic issue is that, as the texture is scaled up in order to be displayed in a large area onscreen, the individual texels that make up the texture are mapped to large ranges of the small pixels that make up the screen. As the edges formed by the texels rotate, a clear stepping pattern emerges that changes from frame to frame as the image rotates.

One way to combat this shimmering effect is to use bilinear filtering instead of nearest-neighbour filtering. This eliminates the shimmering effect entirely, but also obliterates the nice sharp aesthetics of the original pixel art:

As mentioned in the article above, it's possible to write GLSL shaders (or code in your shading language of choice) to implement so-called fat pixels.

Essentially, the sampling of texels from a texture is performed using bilinear filtering, but the actual texture coordinates used to sample from the texture are subtly adjusted such that the actual filtering only occurs on the very edges of texel boundaries. This is the best of both worlds: The edges of texels are filtered to eliminate shimmering, but the image remains sharp and is not blurred.

Example code taken from the article to implement this is as follows:

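    vec2 uv_iq( vec2 uv, ivec2 texture_size ) {
        // Convert normalized UVs into texel-space coordinates.
        vec2 pixel = uv * texture_size;
        // Nearest texel boundary (texel centres sit at boundary +/- 0.5).
        vec2 seam = floor(pixel + 0.5);
        // How many texels a single screen pixel spans.
        vec2 dudv = fwidth(pixel);
        // Blend only within half a screen pixel of the boundary;
        // everywhere else, snap to the neighbouring texel centre.
        pixel = seam + clamp( (pixel - seam) / dudv, -0.5, 0.5);
        return pixel / texture_size;
    }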

It's not important to understand exactly what the code is doing here, but pay special attention to the fact that the code uses partial derivatives indirectly via the GLSL fwidth() function. The code also has access to the size of the texture in pixels (the texture_size parameter).
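For readers who do want to see what the remapping does to actual numbers, here is a rough one-axis sketch in Python. It is not the article's code: `fat_pixel_coord` and `texels_per_screen_pixel` are made-up names, and the latter is passed in by hand to stand in for the value fwidth() would supply on the GPU.

```python
import math


def fat_pixel_coord(pixel: float, texels_per_screen_pixel: float) -> float:
    """One-axis version of the uv_iq remap, working in texel units.

    `texels_per_screen_pixel` is a hypothetical stand-in for fwidth(pixel);
    a CPU sketch has no derivatives, so the caller supplies it.
    """
    # Nearest texel boundary; texel centres are at boundary +/- 0.5.
    seam = math.floor(pixel + 0.5)
    # Distance from the boundary, measured in screen pixels.
    offset = (pixel - seam) / texels_per_screen_pixel
    # Hand-written clamp to [-0.5, 0.5].
    return seam + max(-0.5, min(0.5, offset))


# Assumed 16x magnification: each screen pixel spans 1/16 of a texel.
footprint = 1.0 / 16.0
for pixel in (3.30, 3.98, 4.00, 4.02, 4.70):
    print(f"{pixel:.2f} -> {fat_pixel_coord(pixel, footprint):.2f}")
```

Under these assumptions, coordinates well inside a texel (3.30, 4.70) land exactly on a texel centre (3.5, 4.5), where bilinear filtering returns a single unblended texel. Only coordinates within half a screen pixel of the boundary at 4.0 stay in the blended zone.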

I've implemented this same kind of code many times in GLSL. It's low cost, it's largely scene-independent, requiring no artist-configured tuning, and it works without issue.

I now want to implement this same algorithm for use as a shader in Blender. How difficult can it be?

Unfortunately, it turns out that, like most things in Blender, the wheels fall off as soon as you give them the slightest poke.

Blender doesn't expose texture size information in rendering nodes, despite clearly having access to it internally. This means that you have to customize each shader by hand to match whatever textures you have assigned. Blender also doesn't expose clamp(), necessitating writing it by hand. This is easy, but it's another mild annoyance to add to the pile. Blender doesn't expose partial derivatives in any form, and I couldn't find a reasonable alternative.

Last of all, you're forced to do all of this in an excruciating node/graph-based "programming" environment because Blender doesn't expose any kind of text-based programming. This means that five lines of straightforward GLSL code become a rat's nest of absurd graph nodes. This is ostensibly done because artists can't handle looking at a few lines of extremely basic code, but can somehow handle looking at an insane spiderweb of graph nodes that utterly obfuscate whatever the original intention behind the code was.
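As a rough illustration of the hand-written clamp mentioned above, the usual trick is to compose a minimum and a maximum. The Python sketch below shows the arithmetic only; `clamp_by_hand` is a made-up name, and mapping each step onto one Minimum or Maximum math node is an assumption about how the graph would be laid out, not a description of the actual node setup in the blend file.

```python
def clamp_by_hand(value: float, low: float, high: float) -> float:
    """Clamp built from two primitive operations, as a node graph would need."""
    # Step 1 (hypothetically one "Maximum" node): raise values below `low`.
    raised = max(value, low)
    # Step 2 (hypothetically one "Minimum" node): lower values above `high`.
    return min(raised, high)


# The fat pixel code clamps its offset to the range [-0.5, 0.5].
for offset in (-4.8, -0.32, 0.0, 0.32, 4.8):
    print(offset, "->", clamp_by_hand(offset, -0.5, 0.5))
```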

I admit defeat. This was the closest approximation I was able to get to fat pixel sampling:

The result manages to combine both blurriness and shimmering, providing the worst possible results.

Wishing a case of treatment-resistant haemorrhoids on all designers of node-based programming environments everywhere, I took a step back and wondered if there was a dumber solution to the problem.

The idea occurred to me that the issue is caused by scaling upwards: A single texel is sampled and repeated over a number of screen pixels, and it's ultimately this that causes the problem. What if, instead, we somehow scaled downwards?

I took the original 59x90 pixel art image, scaled it up to 944x1440, and then rendered using straightforward bilinear filtering. The result:

Shimmering is almost entirely eliminated, and the sharp appearance is preserved. I suspect the effect is proportional to the degree of scaling; as long as the sampled texture is larger than it will appear onscreen, things work out. You wouldn't want to do this in a game engine or other realtime engine; no one wants to use up all of their precious texture memory on a few pixel art sprites. In an actual rendering engine, however, you would write the five or so lines of shader code and forget about it, and not have to deal with any of this.
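The 59x90 to 944x1440 step is an exact 16x enlargement on both axes. A minimal sketch of that pre-scaling in plain Python follows, assuming nearest-neighbour resampling (the filter used for the upscale isn't stated here, but anything else would blur the source before it ever reached the renderer). The checkerboard is placeholder data, not the real sprite, and `upscale_nearest` is a made-up helper name.

```python
def upscale_nearest(pixels, factor):
    """Repeat every texel `factor` times horizontally and vertically."""
    scaled = []
    for row in pixels:
        # Stretch the row horizontally.
        wide_row = [texel for texel in row for _ in range(factor)]
        # Then repeat the stretched row vertically (fresh copy per row).
        scaled.extend(list(wide_row) for _ in range(factor))
    return scaled


# Placeholder 59x90 checkerboard standing in for the real pixel art.
width, height, factor = 59, 90, 16
source = [[(x + y) % 2 for x in range(width)] for y in range(height)]

big = upscale_nearest(source, factor)
print(len(big[0]), len(big))  # 944 1440
```

In practice an image editor or any imaging library would do the same job; the point is only that each texel becomes a 16x16 block, so bilinear filtering has room to blend across a thin band at the block edges without touching the interiors.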

Sometimes the dumbest solution is the best and only solution.

Blend file: fatPixels.blend