In a previous episode, I moved the contents of a server into a new SC-316 case.

While the case seems to be of generally good quality, mine has turned out to have a serious fault. The case's drive bays are implemented as four mounted PCB backplanes, each with its own power connector and SAS connector. Each backplane is individually powered via a standard, completely awful, AMP Mate-n-Lok 1-480424-0 power connector.

Anyone who's dealt with these connectors knows how bad they are. They're next to impossible to plug in, and next to impossible to unplug once plugged in. To quote the Wikipedia page on the connector:

Despite its widespread adoption, the connector does have problems. It is difficult to remove because it is held in place by friction instead of a latch, and some poorly constructed connectors may have one or more pins detach from the connector during mating or de-mating. There is also a tendency for the loosely inserted pins on the male connector to skew out of alignment. The female sockets can spread, making the connection imperfect and subject to arcing. Standard practice is to check for any sign of blackening or browning on the white plastic shell, which would indicate the need to replace the arcing connector. In extreme cases the whole connector can melt due to the heat from arcing.

To summarize:

- The connector is difficult to plug in due to a friction fit.

- The connector is difficult to unplug due to a friction fit.

- The pins can be bent and damaged should the user do something as completely unforgivable as to plug in the connector.

- The pins of the connector are subject to arcing, risking a fire.

And yet, somehow, we're fitting this ridiculous 1960s relic onto modern power supplies, despite far better connectors being available: frankly, any of the JST connectors rated at 15A or above.

Back to the story. I connected the power supply to the top two backplanes, leaving the bottom two unpowered. I didn't have SAS connections for those backplanes anyway; I really only needed a 3U case because it had to contain a full-height PCI card, and there wasn't a reasonably priced 3U case that didn't also come with 16 drive bays.

I placed some disks in the second row of bays. The lights came on, the disks were accessible, no issues.

I placed a disk in the top row of bays... Nothing. I tried a disk in a different bay on the same row... Nothing.

I shut the machine down, dragged it out of the rack, opened it, and took a look at the backplanes. Nothing appeared to be wrong until I wiggled the power connector for the top backplane to check that it was correctly plugged in.

Uh oh.

The entire power connector came off in my hand.

It turns out that the power connectors are not through-hole soldered connectors. They're rather flimsy plastic connectors that are screwed onto the board. You can see from the image that there are two thin plastic ears on the connector, and the ears have simply ripped off. Real AMP Mate-n-Lok connectors are typically made from nylon; these connectors do not feel like nylon, and I don't think nylon would have torn this easily.

I inspected the rest of the connectors and discovered that the bottom connector (which I hadn't even touched) was also cracked on one side.

This effectively leaves only two of four sets of drive bays functional.

I'm waiting to hear back from ServerCase support. This will be the third time I've had to use their support, and they have been excellent every time, but I'm not looking forward to the possibility of having to take everything out of the case and send it back.

My ideal outcome for this is that they send me new backplanes with real connectors on them, properly soldered onto the board. I realize that's unlikely. I've already been looking at replacement connectors that I can solder onto the boards myself. There are some connectors from TE Connectivity that look like they would fit.

I have an idiotic number of GitHub repositories. There are, at the time of writing, 536 repositories in my account, of which I'm the "source" for 454. At last count, I'm maintaining 159 of my own projects, plus less-than-a-hundred-but-more-than-ten open source and closed source projects for third parties.

When I registered on GitHub back in something like 2011, there was no concept of an "organization". People had personal accounts and that was that.

GitHub exposes many configuration methods that can be applied on a per-organization basis. For example, if a CI process requires access to secrets, those secrets can be configured in a single place and shared across all builds in an organization. If the same CI process is used in a personal account, the secrets it uses have to be configured in every repository that uses the CI process. This is obviously unmaintainable.

I've decided to finally set up an organization, and I'll be transferring actively maintained ongoing projects there.

I recently moved the components of an existing server into a new SC-316 case. I ordered a Seasonic SS-600H2U power supply to replace the somewhat elderly Seasonic ATX power supply that was in the old case.

Unfortunately, when I turned on the power supply for the first time, I was greeted with an angry vacuum cleaner sound that suggested bad fan bearings.

See the following video:

I filed a support request with ServerCase, sent them the video, and they wordlessly sent me a new power supply without even asking for the old one back. Thanks very much!

Edit: https://github.com/io7m/com.io7m.visual.comfyui.wideoutpaint

One of the acknowledged limitations of Stable Diffusion is that the underlying models used for image generation have a definite preferred image size. For example, the Stable Diffusion 1.5 model prefers to generate images that are 512x512 pixels in size. SDXL, on the other hand, prefers to generate images that are 1024x1024 pixels in size. Models will typically also tolerate other combinations of width and height, as long as the total number of pixels stays close to that of the "native" image size. For example, there's a list of "permitted" dimensions that will work for SDXL: 1024x1024, 1152x896, 1216x832, and so on. The product of each of these pairs of dimensions is always close to 1048576 (1024x1024), though not necessarily equal to it: 1152x896, for instance, comes to 1032192 pixels.
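A quick way to check that arithmetic: the sketch below computes the pixel count of each SDXL dimension pair listed above and compares it with the 1024x1024 native size. The function name is made up for illustration. The point is that the products land close to 1048576, but not exactly on it.

```python
NATIVE_PIXELS = 1024 * 1024  # SDXL's native 1024x1024 size: 1048576 pixels


def fraction_of_native(width: int, height: int) -> float:
    """Return width * height as a fraction of the SDXL native pixel count."""
    return (width * height) / NATIVE_PIXELS


for width, height in [(1024, 1024), (1152, 896), (1216, 832)]:
    print(f"{width}x{height}: {width * height} pixels, "
          f"{fraction_of_native(width, height):.1%} of native")
```

This reports 100.0% for 1024x1024, 98.4% for 1152x896 and 96.5% for 1216x832.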

This is all fine, except that often we want to generate images larger than the native size. There are methods such as model-based upscaling (Real-ESRGAN and SwinIR being the current favourites), but these don't really result in more detail being created in the image; they more or less just sharpen lines and give definition to forms.

It would obviously be preferable if we could just ask Stable Diffusion to generate larger images in the first place. Unfortunately, if we ask any of the models to generate images larger than their native image size, the results are often problematic, to say the least. Here's a prompt:

high tech office courtyard, spring, mexico, sharp focus, fine detail

From Stable Diffusion 1.5 using the Realistic Vision checkpoint, this yields the following image at 512x512 resolution:

That's fine. There's nothing really wrong with it. But what happens if we want the same image but at 1536x512 instead? Here are the exact same sampling settings and prompt, but with the image size set to 1536x512:

It's clearly not just the same image but wider. It's an entirely different location. Worse, specifying large initial image sizes like this can lead to subject duplication depending on the prompt. Here's another prompt:

woman sitting in office, spain, business suit, sharp focus, fine detail

Now here's the exact same prompt and sampling setup, but at 1536x512:

We get subject duplication.

Outpainting

It turns out that outpainting can be used quite successfully to generate images much larger than the native model image size, and can avoid problems such as subject duplication.

The process of outpainting is merely a special case of the process of inpainting. For inpainting, we mark a region of an image with a mask and instruct Stable Diffusion to regenerate the contents of that region without affecting anything outside the region. For outpainting, we simply pad the edges of an existing image to make it larger, and mark those padded regions with a mask for inpainting.
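To make the padding step concrete, here is a minimal sketch. It treats an image as a list of rows of pixel values, which is a simplification; a real pipeline would use a proper image type. It pads the image on the left and/or right and builds the matching mask, where 1 marks pixels the inpainting stage may regenerate and 0 marks original pixels it must keep. The function name and the fill value are hypothetical.

```python
def pad_for_outpaint(image, left=0, right=0, fill=0):
    """Pad each row of `image` horizontally and return (padded, mask).

    `image` is a list of rows, each row a list of pixel values. In the
    returned mask, 1 marks a padded pixel to be inpainted and 0 marks an
    original pixel that must be left untouched.
    """
    padded = []
    mask = []
    for row in image:
        padded.append([fill] * left + list(row) + [fill] * right)
        mask.append([1] * left + [0] * len(row) + [1] * right)
    return padded, mask


# A 2x2 "image" padded rightwards by 2 pixels.
image = [[5, 6],
         [7, 8]]
padded, mask = pad_for_outpaint(image, right=2)
print(padded)  # [[5, 6, 0, 0], [7, 8, 0, 0]]
print(mask)    # [[0, 0, 1, 1], [0, 0, 1, 1]]
```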

The basic approach is to select a desired output size whose dimensions are multiples of the native image dimensions for the model being used. For example, I'll use 1536x512: three times the width of the Stable Diffusion 1.5 native size.
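Choosing the output size this way means the final image is a whole number of native-size tiles, with one round of padding and inpainting per tile beyond the center image. The hypothetical helper below checks the width and lists the padding steps. It assumes horizontal outpainting only, and it extends this example's right-then-left alternation to wider images, which this post doesn't itself cover.

```python
def padding_plan(output_width, native_width=512):
    """Return the (direction, pixels) padding steps that grow a single
    native-width center image to output_width, alternating right and left
    and starting rightwards, as in the 1536x512 workflow."""
    if output_width % native_width != 0:
        raise ValueError("output width must be a multiple of the native width")
    tiles = output_width // native_width
    steps = []
    # One padding step per tile beyond the center image.
    for i in range(tiles - 1):
        direction = "right" if i % 2 == 0 else "left"
        steps.append((direction, native_width))
    return steps


print(padding_plan(1536))  # [('right', 512), ('left', 512)]
```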

The workflow is as follows:

- Generate a 512x512 starting image C. This is the "center" image.

- Pad C rightwards by 512 pixels, yielding a padded image D.

- Feed D into an inpainting sampling stage. This will fill in the padded area using contextual information from C, without rewriting any of the original image content from C. This will yield an image E.

- Pad E leftwards by 512 pixels, yielding a padded image F.

- Feed F into an inpainting sampling stage. This will fill in the padded area using contextual information from E, without rewriting any of the original image content from E. This will yield an image G.

- Optionally, run G through another sampling step set to resample the entire image at a low denoising value. This can help bring out fine details, and can correct any visible seams between image regions that might have been created by the above steps.
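The data flow of those steps can be sketched as follows. The "image" here is only one label per pixel column, recording which prompt produced that column, and `generate` and `inpaint` are hypothetical stand-ins for the real sampling stages. The sketch shows the order of operations C, D, E, F, G, and shows that masked inpainting never touches existing columns. The optional final resample is omitted. The prompts are the two used for the center and side regions in this post.

```python
NATIVE = 512  # Stable Diffusion 1.5 native width, in pixels


def pad(columns, left=0, right=0):
    """Pad a row of column labels with None; return (padded, mask).
    True in the mask marks a column the inpainting stage may fill."""
    padded = [None] * left + columns + [None] * right
    mask = [c is None for c in padded]
    return padded, mask


def generate(prompt, width):
    """Stand-in for a normal (non-inpainting) sampler: every column is
    labelled with the prompt that produced it."""
    return [prompt] * width


def inpaint(columns, mask, prompt):
    """Stand-in for an inpainting sampler: only masked columns change."""
    return [prompt if m else c for c, m in zip(columns, mask)]


center_prompt = "woman sitting in office, spain, business suit, sharp focus, fine detail"
side_prompt = "office, spain, sharp focus, fine detail"

c = generate(center_prompt, NATIVE)  # C: the 512-wide center image
d, d_mask = pad(c, right=NATIVE)     # D: C padded rightwards
e = inpaint(d, d_mask, side_prompt)  # E: right region filled in
f, f_mask = pad(e, left=NATIVE)      # F: E padded leftwards
g = inpaint(f, f_mask, side_prompt)  # G: left region filled in

print(len(g))  # 1536
```

Because each inpainting pass only writes where the mask is set, the center prompt can only ever appear in the middle 512 columns, which is why duplicating the subject isn't possible.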

By breaking the generation of the full size image into separate steps, we can apply different prompts to different sections of the image and effectively avoid even the possibility of subject duplication. For example, let's try this prompt again:

woman sitting in office, spain, business suit, sharp focus, fine detail

We'll use this prompt for the center image, but we'll adjust the prompt for the left and right images:

office, spain, sharp focus, fine detail

We magically obtain the following image:

ComfyUI

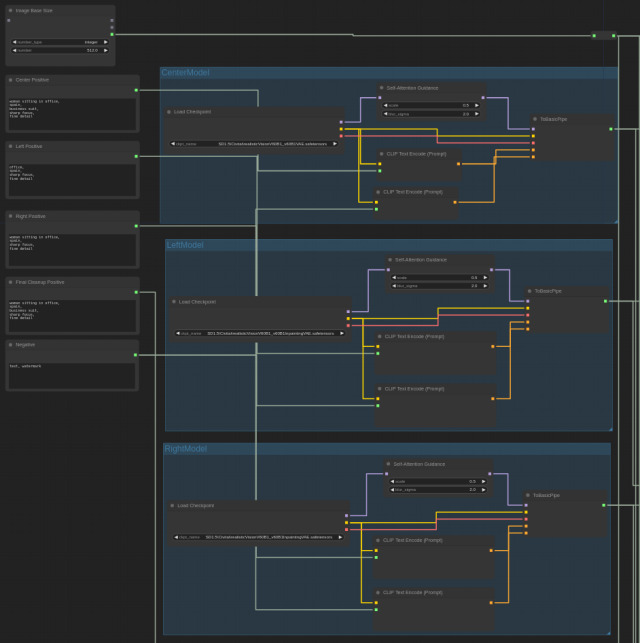

I've included a ComfyUI workflow that implements the above steps. Execution starts at the top left and should be assumed to proceed downwards and rightwards.

The initial groups of nodes at the top left allow for specifying a base image size, prompts for each image region, and the checkpoints used for image generation. Note that the checkpoint for the center image must be a non-inpainting model, whilst the left and right image checkpoints must be inpainting models. ComfyUI will fail with a nonsensical error at generation time if the wrong kinds of checkpoints are used.

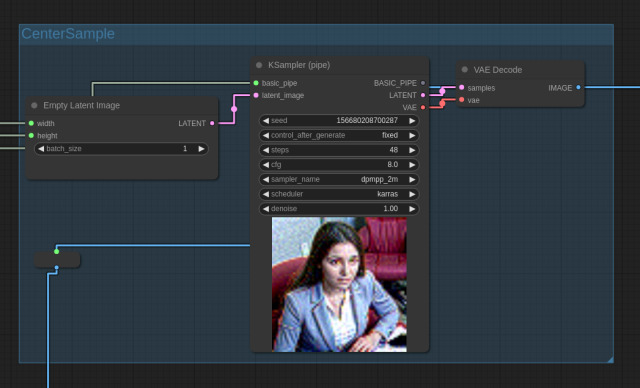

Execution proceeds to the CenterSample stage which, unsurprisingly, generates the center image:

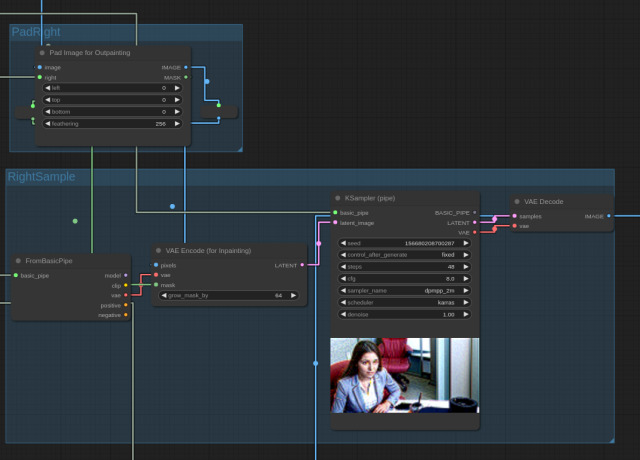

Execution then proceeds to the PadRight and RightSample stages, which pad the image rightwards and then produce the rightmost image, respectively:

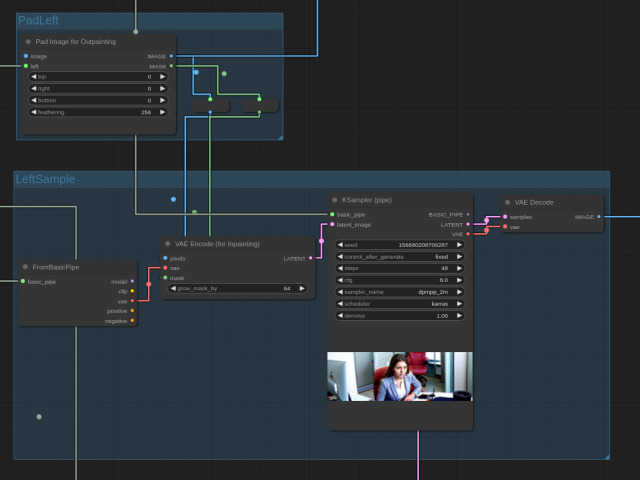

Execution then proceeds to the PadLeft and LeftSample stages, which pad the image leftwards and then produce the leftmost image, respectively:

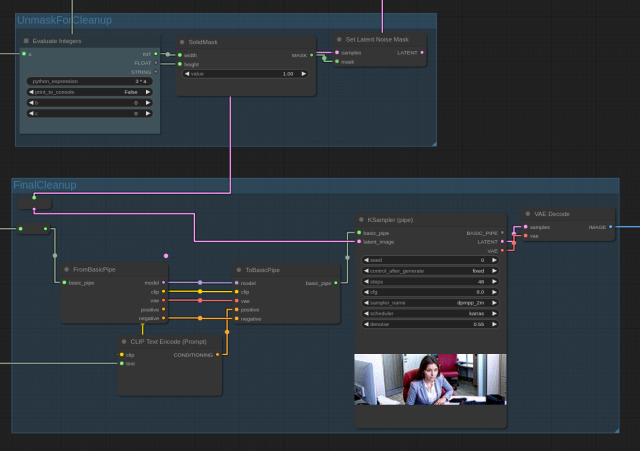

Execution then proceeds to a FinalCleanup stage that runs a ~50% denoising pass over the entire image using a non-inpainting checkpoint. As mentioned, this can fine-tune details in the image and eliminate any potential visual seams. Note that we must use a Set Latent Noise Mask node; the latent image will be arriving from the previous LeftSample stage with a mask that restricts denoising to the region covered by the leftmost image. If we want to denoise the entire image, we must reset this mask to one that covers the entire image.

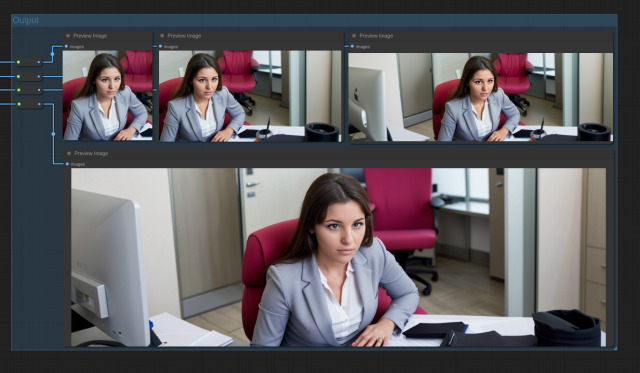
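The following sketch shows why the mask reset matters. It uses a simplified model with one label per pixel column, which is a hypothetical simplification and not how ComfyUI represents latents. A cleanup pass that honours the mask left over from LeftSample only reaches the leftmost 512 columns. A mask covering the whole image lets it reach all 1536.

```python
NATIVE = 512
WIDTH = 3 * NATIVE  # the 1536-pixel-wide final image


def resample(columns, mask, label):
    """Stand-in for a sampler: re-labels only the columns the mask allows."""
    return [label if m else c for c, m in zip(columns, mask)]


image = ["left"] * NATIVE + ["center"] * NATIVE + ["right"] * NATIVE

# Mask still attached to the latent after LeftSample: leftmost region only.
left_only_mask = [True] * NATIVE + [False] * (WIDTH - NATIVE)
# The role Set Latent Noise Mask plays here: a mask over the whole image.
full_mask = [True] * WIDTH

stale = resample(image, left_only_mask, "cleaned")
fresh = resample(image, full_mask, "cleaned")

print(stale.count("cleaned"))  # 512
print(fresh.count("cleaned"))  # 1536
```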

The output stage displays the preview images for each step of the process:

Mostly a post just to remind my future self.

I run a Forgejo installation locally. I'd been seeing the following error when trying to git push to some repositories:

$ git push --all
Enumerating objects: 12, done.
Counting objects: 100% (12/12), done.
Delta compression using up to 12 threads
Compressing objects: 100% (8/8), done.
send-pack: unexpected disconnect while reading sideband packet
Writing objects: 100% (8/8), 3.00 MiB | 9.56 MiB/s, done.
Total 8 (delta 4), reused 0 (delta 0), pack-reused 0 (from 0)
fatal: the remote end hung up unexpectedly
Everything up-to-date

The Forgejo server is running in a container behind an nginx proxy.

The logs for nginx showed every request returning a 200 status code, so that was no help.

The logs for Forgejo showed:

podman[1712113]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed GET /git/example.git/info/refs?service=git-receive-pack for 10.0.2.100:0, 401 Unauthorized in 1.0ms @ repo/githttp.go:532(repo.GetInfoRefs)
forge01[1712171]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed GET /git/example.git/info/refs?service=git-receive-pack for 10.0.2.100:0, 401 Unauthorized in 1.0ms @ repo/githttp.go:532(repo.GetInfoRefs)
forge01[1712171]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed GET /git/example.git/info/refs?service=git-receive-pack for 10.0.2.100:0, 200 OK in 145.1ms @ repo/githttp.go:532(repo.GetInfoRefs)
podman[1712113]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed GET /git/example.git/info/refs?service=git-receive-pack for 10.0.2.100:0, 200 OK in 145.1ms @ repo/githttp.go:532(repo.GetInfoRefs)
forge01[1712171]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed POST /git/example.git/git-receive-pack for 10.0.2.100:0, 0 in 143.8ms @ repo/githttp.go:500(repo.ServiceReceivePack)
podman[1712113]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed POST /git/example.git/git-receive-pack for 10.0.2.100:0, 0 in 143.8ms @ repo/githttp.go:500(repo.ServiceReceivePack)
forge01[1712171]: 2024/04/13 09:36:59 .../web/repo/githttp.go:485:serviceRPC() [E] Fail to serve RPC(receive-pack) in /var/lib/gitea/git/repositories/git/example.git: exit status 128 - fatal: the remote end hung up unexpectedly
forge01[1712171]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed POST /git/example.git/git-receive-pack for 10.0.2.100:0, 0 in 116.1ms @ repo/githttp.go:500(repo.ServiceReceivePack)
podman[1712113]: 2024/04/13 09:36:59 .../web/repo/githttp.go:485:serviceRPC() [E] Fail to serve RPC(receive-pack) in /var/lib/gitea/git/repositories/git/example.git: exit status 128 - fatal: the remote end hung up unexpectedly
podman[1712113]: 2024/04/13 09:36:59 ...eb/routing/logger.go:102:func1() [I] router: completed POST /git/example.git/git-receive-pack for 10.0.2.100:0, 0 in 116.1ms @ repo/githttp.go:500(repo.ServiceReceivePack)

Less than helpful, but it does seem to place blame on the client.

Apparently, what I needed was:

$ git config http.postBuffer 157286400

After doing that, everything magically worked:

$ git push
Enumerating objects: 12, done.
Counting objects: 100% (12/12), done.
Delta compression using up to 12 threads
Compressing objects: 100% (8/8), done.
Writing objects: 100% (8/8), 3.00 MiB | 153.50 MiB/s, done.
Total 8 (delta 4), reused 0 (delta 0), pack-reused 0 (from 0)
remote: . Processing 1 references
remote: Processed 1 references in total
To https://forgejo/git/example.git
bf6389..5f216b master -> master

The git documentation says:

http.postBuffer

Maximum size in bytes of the buffer used by smart HTTP transports when POSTing data to the remote system. For requests larger than this buffer size, HTTP/1.1 and Transfer-Encoding: chunked is used to avoid creating a massive pack file locally. Default is 1 MiB, which is sufficient for most requests.

I don't know why this fixes the problem.
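For what it's worth, the arithmetic implied by that documentation can be checked with a small sketch. 157286400 bytes is exactly 150 MiB. The failing push reported writing 3.00 MiB of objects, which is larger than the 1 MiB default. By the documentation's own rule, git would therefore have switched to chunked transfer encoding, and with the 150 MiB buffer it would not. Treat that as a guess rather than a diagnosis. Nothing in the logs above confirms that chunked encoding is what the proxy or Forgejo objected to, and the pack size is only an approximation of the actual POST body size.

```python
MIB = 1024 * 1024  # one mebibyte, in bytes

DEFAULT_POST_BUFFER = 1 * MIB        # default per the git documentation quoted above
CONFIGURED_POST_BUFFER = 157286400   # value set with `git config http.postBuffer`


def uses_chunked_encoding(request_bytes: int, post_buffer: int) -> bool:
    """Apply the documented rule: requests larger than http.postBuffer are
    sent with Transfer-Encoding: chunked instead of a single buffered POST."""
    return request_bytes > post_buffer


# Approximation: the "Writing objects: ... 3.00 MiB" figure from the failing
# push, standing in for the real size of the POST body.
pack_bytes = 3 * MIB

print(CONFIGURED_POST_BUFFER / MIB)                               # 150.0
print(uses_chunked_encoding(pack_bytes, DEFAULT_POST_BUFFER))     # True
print(uses_chunked_encoding(pack_bytes, CONFIGURED_POST_BUFFER))  # False
```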